Oily Pine 1 - Building Alpine Packages to test a shell

Author: Samuel Hierholzer | Date: 04 Oct 2025

I want to share a project I’ve been working on over the last year - I call it Oily Pine.

Goal: Build all Alpine Linux packages with OSH from Oils for Unix as shell. Sounds easy, right?

This is the first in a miniseries of three blog posts:

- Building Alpine Packages to test a shell

- Throwing log-files at ChatGPT?

- Building packages reliably (To be released)

Introduction

OSH - the “compatible” part of the Oils project - is probably the newest POSIX-compliant shell out there. It’s both POSIX and Bash compatible.

And it is already very mature. OSH has been running real shell programs since 2017. And the Oils repo has a lot of tests, benchmarks and metrics which are published on each release (latest).

So OSH can run some of the biggest publicly available shell scripts. But is this enough?

Especially with shell my experience is that people typically learn a unique style of writing their code and then stick to it. Why try out a feature which could shoot you in the foot, when you can achieve the same behaviour with 5 messy lines of code you know will work when you copy them from your last script, right?

This became very apparent to me when I went through around 50 Nagios checks from “the internet” with my apprentice last year and tried to apply shellcheck to them. We saw vastly different styles and had to use an unexpected amount of #shellcheck disable=...!

So I believe we need to test many different shell scripts, rather than a few big codebases! That will happen automatically once people start adopting OSH, but until then we need to do that work ourselves.

Searching for Shell Scripts

Shell is a sneaky language. Just recently, I learned that the line VirusEvent mycommand in a ClamAV config file will execute the command $USER_SHELL -c 'mycommand' when it finds a virus. Not just mycommand. This means I could also do something crazy like VirusEvent for i in 1 2 3; do echo $i; done; echo "VIRUS!" (as long as my shell supports this)! Shell is “embedded” like that in many places.
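To make the difference between running mycommand and running $USER_SHELL -c 'mycommand' concrete, here is a minimal sketch. The function run_event is a hypothetical stand-in for whatever program hands its configured string to a shell, and the fallback to /bin/sh is my assumption for when USER_SHELL is not set:

```sh
# Hypothetical stand-in for a daemon that passes a configured string to a shell.
# Falls back to /bin/sh if USER_SHELL is unset (an assumption for this demo).
run_event() {
    "${USER_SHELL:-/bin/sh}" -c "$1"
}

# The whole string is parsed as shell code, so loops and ';' just work.
run_event 'for i in 1 2 3; do echo $i; done; echo "VIRUS!"'
```

Because the string goes through -c, whichever shell sits behind USER_SHELL has to parse it, which is exactly why such places are interesting for testing a new shell.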

The most prominent and heavy use of this is in Makefiles, which shell out for almost any command:

mytarget:

echo this is shell

secondtarget:

# We can do the same thing as in the Clamav VirusEvent

for i in a b c; do echo $i; done

Therefore, it’s not a far stretch to start building packages when we want to run a lot of shell, because building packages usually involves Makefiles.

Not only are there Makefiles, but also huge configure scripts written (or generated!) in shell. And Alpine Linux “Aports” are even package definitions written in shell! And it turns out there are package testing tools written in shell, too!

So we’ll definitely get a few different styles of shell, when we build packages.

That’s why I started that project to build all Aports using Oils as build shell.

The Setup

Before we can build packages, we need the package definitions. Since Alpine Linux keeps them all in the official Aports repo, we can just clone that repository.

I actually created a fork, to store any custom tooling in the same repository. But to make potential rebasing easy, I created a folder oily in the repository and put everything in there. If you’re curious, you can check it out after finishing this blog (series). :)

Containers

To build Alpine packages, we need to set up a build environment. There are a few build environment options for that:

- A virtual machine with Alpine Linux

- An Alpine container image

- A chroot

Using a container is probably the easiest way to get started, so I picked that.

To build a container image, we need a Containerfile. It’s literally the same as a Dockerfile, but I use podman instead of Docker and that wants the more generic name.

# oily/container/Containerfile

FROM alpine:latest

ADD setup-build-env.sh /bin/setup-build-env.sh

RUN setup-build-env.sh

USER packager

WORKDIR /home/packager

VOLUME ["/home/packager/aports"]

ENTRYPOINT ["/home/packager/aports/oily/container/package.sh"]

This file does not do much:

- Start from an Alpine Linux base container

- Run the setup-build-env.sh script

- Set some variables on how a container with this image should be executed

The (not so) hard work is in the setup-build-env.sh. It downloads and installs Oils (which is in the testing repository), creates a packager user, gives it the required permissions and creates some folders/softlinks:

# oily/container/setup-build-env.sh

# oils-* is only in the testing repos...

echo https://dl-cdn.alpinelinux.org/alpine/edge/testing >> /etc/apk/repositories

apk add abuild abuild-rootbld doas build-base alpine-sdk oils-for-unix oils-for-unix-binsh oils-for-unix-bash lua-aports

# Delete the testing repo again

sed -i '/testing/d' /etc/apk/repositories

# wheel group should be able to run `doas` without password

echo 'permit nopass :wheel' > /etc/doas.conf

adduser -Du 1000 packager

adduser packager abuild

adduser packager wheel

mkdir -p /home/packager/aports

# Create softlinks to keep artifacts

ln -s /home/packager/aports/oily/abuild /home/packager/.abuild

ln -s /home/packager/aports/oily/logs /home/packager/logs

ln -s /home/packager/aports/oily/packages /home/packager/packages

chown -R packager /home/packager

# Remove the cache of installed packages to make the container smaller

apk cache clean --purge

Since we will mount our repository with VOLUME ["/home/packager/aports"], anything put inside of that folder while in the container will actually be stored in the repository and thus available outside of the container.

The softlinks make sure that the signing key, logs and packages generated later will be stored in the repo.
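The effect of those softlinks can be tried out without any container. In this small sketch, two temporary directories stand in for the mounted repository and the packager's home directory (both are assumptions for the demo, not the real paths):

```sh
# Temporary directories stand in for the mounted repo and the packager's home.
repo=$(mktemp -d)
home=$(mktemp -d)

mkdir -p "$repo/oily/logs"
ln -s "$repo/oily/logs" "$home/logs"

# Writing through the symlink...
echo "build ok" > "$home/logs/demo.log"

# ...creates the file inside the "repository".
cat "$repo/oily/logs/demo.log"
```

Anything a build writes to $HOME/logs therefore ends up in oily/logs of the mounted volume and survives the container.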

The Oils Package

My script installs 3 oils-* packages:

- oils-for-unix

- oils-for-unix-binsh

- oils-for-unix-bash

This is because I was very considerate when creating the Aport definition:

The package oils-for-unix creates the actual binary /usr/bin/oils-for-unix and 2 symlinks /usr/bin/osh and /usr/bin/ysh, both pointing to /usr/bin/oils-for-unix. Executing osh will start a bash-compatible shell, while ysh starts Oils with all the feature flags for a modern shell enabled.

This usage of symlinks to change behaviour is nothing special. When executed via the symlink, the ARGV[0] of the executable is set to the name of the symlink. The executable can then use this to change its behaviour. Probably the most famous binary doing this is busybox, which supports many coreutils tools like cp, ls and even grep and awk - all in a single binary. And while we’re at it, Busybox also provides the default shell in Alpine Linux that way. The package busybox-binsh installs the softlink /bin/sh -> /bin/busybox. Oils uses this feature to either start in POSIX or Ysh “compatibility modes”.
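The same trick works with a plain shell script, where $0 plays the role of ARGV[0]. This is only an illustration of the mechanism, not how Oils or Busybox implement it; the names multi, osh-demo and ysh-demo are made up for the example:

```sh
demo=$(mktemp -d)

# One script that changes behaviour depending on the name it was called by.
cat > "$demo/multi" <<'EOF'
#!/bin/sh
case "${0##*/}" in
    osh-demo) echo "compatible mode" ;;
    ysh-demo) echo "modern mode" ;;
    *)        echo "called as ${0##*/}" ;;
esac
EOF
chmod +x "$demo/multi"

# Two symlinks pointing to the same file
ln -s multi "$demo/osh-demo"
ln -s multi "$demo/ysh-demo"

"$demo/osh-demo"
"$demo/ysh-demo"
```

Both symlinks run the same file, but each invocation sees a different name and picks a different branch.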

The only content of the package oils-for-unix-binsh is a symlink /bin/sh -> /usr/bin/oils-for-unix. It also provides the additional “virtual package” called /bin/sh, a path. This is probably the weirdest package name I’ve ever seen!

What's a virtual package?

A “virtual package” is like a group of packages all providing the same or similar function. These packages usually install exactly the same file and therefore can’t be installed at the same time. That’s the case with busybox-binsh and oils-for-unix-binsh, which both provide the file (and virtual package!) /bin/sh.

So if I do apk add /bin/sh I tell apk that I don’t care about the exact package, as long as there is some package which provides the virtual package /bin/sh. It will install busybox-binsh (if not yet installed) because this has the highest provider_priority (100). My oils-for-unix-binsh will be ignored, because it only has a priority of 10.

But if I explicitly run the command apk add oils-for-unix-binsh, it will first remove busybox-binsh and then install oils-for-unix-binsh.
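In an APKBUILD (which is itself shell), a virtual package and its priority are declared with two variables. The following is a hypothetical excerpt and not copied from the real aport; only the values /bin/sh and 10 are taken from the description above:

```sh
# Hypothetical APKBUILD excerpt, not copied from the real oils-for-unix aport.
# "provides" and "provider_priority" are regular APKBUILD variables.
provides="/bin/sh"      # the virtual package this package can satisfy
provider_priority=10    # lower than the 100 of busybox-binsh, so busybox wins by default
```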


oils-for-unix-bash is similar to the ...-binsh package, except it conflicts with the bash package instead of busybox-binsh, as it creates a symlink /bin/bash -> /usr/bin/oils-for-unix. We could provide a virtual package /bin/bash to solve this conflict. But I learned that both packages implicitly provide the virtual package cmd:bash. This is because they install an executable file bash, and apk seems to create a virtual package for each file in /bin/.

I’m honestly not sure why we had to use the /bin/sh virtual package previously, when there’s already a cmd:sh package. I assume this is for historical reasons.

Signing Packages

Remember the last line in the Containerfile?

ENTRYPOINT ["/home/packager/aports/oily/container/package.sh"]

It points to a script which should be executed when we start the container. This script does only 2 things:

# oily/container/package.sh

# Generate or reuse an existing apk signing key

../setup-key.sh

# Build all packages

buildrepo -k -l "$HOME/logs" ./main

buildrepo -k -l "$HOME/logs" ./community

buildrepo -k -l "$HOME/logs" ./testing

The buildrepo script is a (shell) script written by the Alpine folks to build a whole set of packages from the aports code repository. Alpine Linux has 3 different “official” package repositories (main, community and testing), and each of them has its own folder in the aports repository. The path to that folder is passed to the buildrepo command as the last argument.

And the setup-key.sh file is a short, funny script that I wrote:

# oily/setup-key.sh

source "$HOME/.abuild/abuild.conf" || true

# generate a key if not existent

if [ -z "$PACKAGER_PRIVKEY" ]; then

echo "generating new key and configfile"

abuild-keygen -na --install

else

echo "using $PACKAGER_PRIVKEY"

doas cp "$PACKAGER_PRIVKEY".pub /etc/apk/keys

fi

So first it tries to source the file $HOME/.abuild/abuild.conf (which, with the symlink, should reside in the repository under oily/abuild).

That file, when created, looks something like this:

# oily/abuild/abuild.conf

PACKAGER_PRIVKEY="/home/packager/.abuild/-68ce4406.rsa"

It has the file ending conf, but it is just a shell script in disguise. :)

(You can ignore the weird rsa key name. We don’t care about that).

So when the config file doesn’t exist yet, it generates both a key and a matching config file.

If a file already exists, it copies the pubkey to the folder /etc/apk/keys.
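Since abuild.conf is just shell, the "generate or reuse" decision can be tried out in isolation. The sketch below uses a temporary directory and a made-up key path, and it only prints what it would do instead of calling abuild-keygen or doas; load_conf is a hypothetical helper, not part of the real script:

```sh
conf_dir=$(mktemp -d)

# Hypothetical helper: source the config if it exists and report the decision.
load_conf() {
    PACKAGER_PRIVKEY=""
    if [ -f "$1" ]; then
        . "$1"
    fi
    if [ -z "$PACKAGER_PRIVKEY" ]; then
        echo "generating new key and configfile"
    else
        echo "using $PACKAGER_PRIVKEY"
    fi
}

# First run: no config file yet
load_conf "$conf_dir/abuild.conf"

# Later runs: the config file exists and sets the variable
echo 'PACKAGER_PRIVKEY="/tmp/demo.rsa"' > "$conf_dir/abuild.conf"
load_conf "$conf_dir/abuild.conf"
```

The explicit file check replaces the source ... || true from the original script; both approaches tolerate a missing config file.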

Copying the key to /etc/apk/keys is not necessary. When abuild finishes a package build, it signs the package and puts it into $HOME/packages.

Now if we want to install that generated package without any further setup, it fails: apk compares the signing key with the keys in the folder /etc/apk/keys. If the key is not in there, the package is considered untrusted.

So when we copy the key to /etc/apk/keys, apk knows that this package is trusted. We could also not copy the key and do apk --allow-untrusted.


For a bit of an overview, the file hierarchy of the git repo looks like this:

- oily-pine  # In the container this is /home/packager/aports/
  - main/  # package definitions of the main repo
  - community/  # package definitions of the community repo
  - testing/  # package definitions of the testing repo
  - oily/
    - container.sh  # Holds functions to build/run the container
    - container/
      - Containerfile
      - Dockerfile  # A softlink to the Containerfile so it works with Docker as well
      - setup-build-env.sh
      - package.sh
    - abuild/  # Keeps the abuild.conf file and key between executions
    - logs/  # build logs
    - packages/  # finished packages. We could also throw them away...

Building Packages

The only missing piece from the folder structure is the code to actually build and run a container!

# simplified version of oily/container.sh

cd "$GIT_ROOT"

build() {

podman build --network=host oily/container/ -t oily-pine-builder

}

package() {

podman run --rm -v ./:/home/packager/aports oily-pine-builder

}

I had some issues with IPv4/6, so I told podman to use the host network with --network=host.

And since we’ll store all outputs in the repository, we can use --rm to remove the container right away. We only need to make sure we mount the current folder properly.
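The simplified script defines build and package, but leaves out how a call like ./oily/container.sh build reaches them. One common way to do it, and purely an assumption about the real script, is a small dispatcher at the end of the file. The two functions are stubbed here so the sketch runs without podman:

```sh
# Stubs standing in for the real functions, so this runs without podman.
build()   { echo "would run: podman build"; }
package() { echo "would run: podman run"; }

# Hypothetical dispatcher: call the function named by the first argument.
dispatch() {
    case "${1:-}" in
        build|package) "$1" ;;
        *) echo "usage: container.sh build|package" >&2; return 2 ;;
    esac
}

dispatch build
dispatch package
```

In a real script, the last line would be dispatch "$@" so that the command line argument selects the function.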

Let’s build and run that Container!

$ ./oily/container.sh build

STEP 1/7: FROM alpine:latest

STEP 2/7: ADD setup-build-env.sh /bin/setup-build-env.sh

STEP 3/7: RUN setup-build-env.sh

...

COMMIT oily-pine-builder

--> 1fed23b134a1

Successfully tagged localhost/oily-pine-builder:latest

1fed23b134a19c77aae25b0591f948d803218cbaa31ada757eb99d3688515ffa

$

$ ./oily/container.sh package

/home/packager

using /home/packager/.abuild/-682737bd.rsa

pigz: not found

1/1579 1/1580 main/acf-jquery 0.4.3-r2

oils: PID 238 exited, but oils didn't start it

oils: PID 258 exited, but oils didn't start it

oils: PID 263 exited, but oils didn't start it

oils: PID 265 exited, but oils didn't start it

oils: PID 261 exited, but oils didn't start it

2/1579 2/1580 main/zlib 1.3.1-r2

oils: PID 594 exited, but oils didn't start it

3/1579 3/1580 main/perl 5.40.2-r0v

4/1579 4/1580 main/linux-headers 6.14.2-r0

...

18/1579 18/1580 main/expat 2.7.1-r0

ERROR: expat: Failed to build

19/1579 18/1580 main/gdbm 1.24-r0

...

WE’RE BUILDING!

Now there were A LOT of these oils: PID xxx exited, but oils didn't start it warnings, but (most of) the packages built successfully. :)

The above snippet also shows how it looks when the package expat fails.

And with that we have the baseline to build all Alpine Linux packages! Or so I thought :)

Doing A First Build

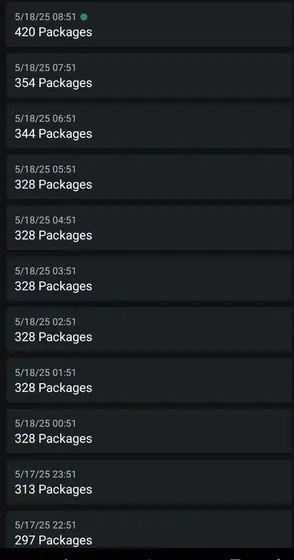

Putting this all together required quite some time, so when it first ran, I was very excited. I was so excited, that I wrote a script which monitored the output and sent me an hourly update to my phone with ntfy:

IIRC it was compiling GCC between 00:00-06:00 that day, which just took some time on my very old server.


The builds ran and ran and ran. They had to be restarted a few times due to different minor issues like a package causing an actual infinite loop. At some point I ran it 3 times with a slight adjustment to build main, testing and community in parallel instead of serially. buildrepo has the convenient characteristic that once a package has been built successfully, it isn’t built again in a future run. So I could just restart the script whenever I wanted and it would only retry the failed packages.

After about 10 days the allocated 500GB disk filled up with 11999 built .apk files, and 5327/6500 successful Aports.

An interesting sidenote: It wasn’t the successfully built packages filling up the disk! Whenever a build failed, the source files were not deleted. This could be the unpacked source tarball, generated intermediate files as well as binaries, depending on the package and where it failed. The built apks themselves were only a few GB in size.
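A quick way to see which failed builds take up the most space is to look for leftover source directories. This sketch assumes that the leftovers live in a src/ folder inside each package directory (for example main/expat/src); leftover_src is a hypothetical helper and not part of the Oily Pine tooling:

```sh
# Hypothetical helper: list leftover src/ directories below one repo folder,
# biggest first, with their size in KiB.
# Assumes the layout <repo>/<pkgname>/src.
leftover_src() {
    for dir in "$1"/*/src; do
        [ -d "$dir" ] || continue
        du -sk "$dir"
    done | sort -rn
}

# Example call; prints nothing if the folder has no leftovers.
leftover_src ./main
```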

82% success rate in a first attempt isn’t all too bad. I hoped for 95% (mainly because it looked like that for the first ~1000 builds), but I think it’s OK given that a single bug could affect many packages.
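For the record, this is how those numbers fit together, using only the two counts from above and integer arithmetic with rounding:

```sh
total=6500
ok=5327

failed=$(( total - ok ))
# Multiply by 1000 first, then add 5 and divide by 10 to round to whole percent
rate=$(( (ok * 1000 / total + 5) / 10 ))

echo "failed: $failed"
echo "success rate: ${rate}%"
```

This prints 1173 failed Aports and a success rate of 82%.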

Coming Up…

And that’s it for this article. I showed you how to build Alpine Linux packages and even showed how I did a first build.

The next article in this series will go into detail on what I did with the 1173 failed packages.